Status

We have established a complete, end-to-end system consisting of three ESP32s. One ESP32 generates data as an access point, a second harvests data from that access point, and a third takes the collected data and sends it to our ETG server.

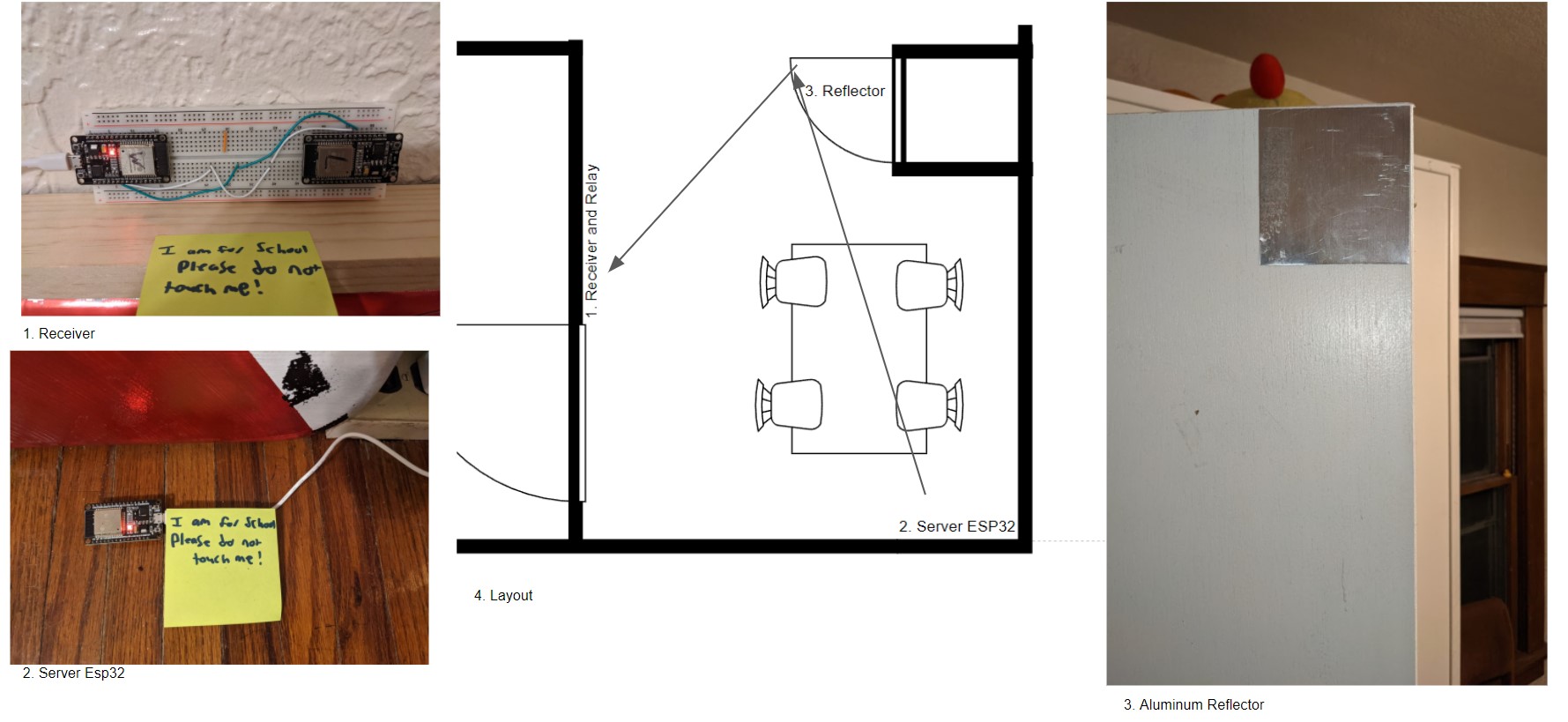

Figure 1: ESP32 Setup

This setup yields about 3 data points per second, which is plenty for our purposes. The data is then passed through an NGINX proxy and on to our ISU server. This server runs several applications, the most prominent of which is a Python Flask server that acts as an API between the other applications and the external apps.
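At roughly 3 data points per second, a 5-second reporting window holds only about 15 samples, so each decision has little data behind it. The sketch below shows one way the API could keep just the most recent window in memory. It is a minimal illustration, not our implementation: the `SampleWindow` class is hypothetical, and the 3 Hz rate and 5-second window are assumptions taken from the figures in this report.

```python
import math
from collections import deque


class SampleWindow:
    """Hypothetical fixed-length buffer holding the most recent samples.

    Assumes samples arrive at a roughly constant rate (about 3 per second
    in our setup) and that only the last `window_s` seconds matter.
    """

    def __init__(self, rate_hz: float = 3.0, window_s: float = 5.0) -> None:
        # Number of samples that fit in the window, rounded up.
        self.capacity = math.ceil(rate_hz * window_s)
        # A deque with maxlen silently discards the oldest item when full.
        self._samples: deque = deque(maxlen=self.capacity)

    def add(self, sample) -> None:
        self._samples.append(sample)

    def is_full(self) -> bool:
        return len(self._samples) == self.capacity

    def snapshot(self) -> list:
        # Return a copy so an inference step cannot mutate the buffer.
        return list(self._samples)


if __name__ == "__main__":
    window = SampleWindow()
    for i in range(20):
        window.add(i)
    print(window.capacity)       # 15
    print(window.snapshot()[0])  # 5 (the oldest five samples were dropped)
```

A bounded deque keeps memory constant no matter how long the collector runs, and the window length can be tuned by changing `window_s` alone.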

Figure 2: System Diagram

With this setup we can successfully collect, forward, analyze, and report data. It's beautiful, and it feels good to have a complete, functional system.

Problems

The only problem is that this system fails almost every aspect of our test plan. It is slow: each inference takes 10+ seconds, which is far outside our requirement to report an open door within 5 seconds. Once we do receive an inference, it is wildly inaccurate. So far we have measured ~12% accuracy, which is worse than chance: a random guess would be expected to be right about 16% of the time.
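The two test-plan checks above reduce to simple comparisons, sketched below. The function names are hypothetical, and the example assumes six equally likely classes, which is what a ~16% chance baseline implies (1/6 ≈ 16.7%), along with the 5-second reporting budget.

```python
def chance_accuracy(num_classes: int) -> float:
    """Expected accuracy of a uniform random guess over `num_classes` labels."""
    if num_classes < 1:
        raise ValueError("num_classes must be at least 1")
    return 1.0 / num_classes


def beats_chance(accuracy: float, num_classes: int) -> bool:
    """True if a measured accuracy is strictly better than random guessing."""
    return accuracy > chance_accuracy(num_classes)


def meets_latency(latency_s: float, budget_s: float = 5.0) -> bool:
    """True if one inference is reported within the test-plan budget."""
    return latency_s <= budget_s


if __name__ == "__main__":
    # Measurements from this report; six classes is an assumption.
    print(f"chance: {chance_accuracy(6):.1%}")  # chance: 16.7%
    print(beats_chance(0.12, num_classes=6))    # False
    print(meets_latency(10.0))                  # False
```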

Solutions

We have proposed solutions to these issues:

Speed

We intend to switch from a process-based server setup to a thread-based one. We hope this will relieve scheduling and resource bottlenecks. Inference is near-instantaneous on our server when the Flask server is not running, so we know the server has enough compute to run inference.
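One way to picture the thread-based approach: load the model once at startup and share it across a small pool of worker threads, rather than paying process start-up and model-loading costs repeatedly. The sketch below uses the standard library's `concurrent.futures.ThreadPoolExecutor`. `DummyModel`, `run_inference`, and `handle_request` are hypothetical stand-ins for our real model and API handler, and the lock assumes the model is not safe to call from several threads at once.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class DummyModel:
    """Hypothetical stand-in for the trained model; loading is the slow part."""

    def __init__(self) -> None:
        time.sleep(0.1)  # simulate an expensive one-time model load
        self.weights = 2

    def predict(self, sample: float) -> float:
        return sample * self.weights


# Loaded once at startup and shared by every worker thread.
MODEL = DummyModel()
# Serializes calls in case the real model is not thread-safe;
# drop it if the model supports concurrent prediction.
MODEL_LOCK = threading.Lock()
# Small fixed pool of threads instead of a process per request.
EXECUTOR = ThreadPoolExecutor(max_workers=4)


def run_inference(sample: float) -> float:
    with MODEL_LOCK:
        return MODEL.predict(sample)


def handle_request(sample: float) -> float:
    """What an API handler might do: hand work to the pool and wait for it."""
    future = EXECUTOR.submit(run_inference, sample)
    # Raises a TimeoutError if the 5-second reporting budget is exceeded.
    return future.result(timeout=5.0)


if __name__ == "__main__":
    futures = [EXECUTOR.submit(run_inference, x) for x in (1.0, 2.0, 3.0)]
    print([f.result() for f in futures])  # [2.0, 4.0, 6.0]
    print(handle_request(5.0))            # 10.0
```

Threads share memory, so the model is loaded only once. CPU-bound pure-Python inference is still limited by the GIL, so this helps most when the heavy lifting happens inside native libraries that release it.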

Accuracy

We have two proposed solutions to increase accuracy.

The first is to improve our machine learning algorithm, as outlined in ML.pdf.

The second essentially replaces the ML component with a custom-built algorithm, as outlined in Algo.pdf.